Avoiding AI brainrot

Published by Vlasta yesterday.

In this follow-up to my holiday special, I want to explore the human side of AI slop and a related topic that I call AI brainrot. Here is my opinion on why low-effort AI content feels repetitive, how the use of AI tools affects the way people think, and what practical steps can help us avoid losing our brainpower in this age of AI.

Why low-effort AI content becomes slop

A useful way to reframe the problem of AI slop is to stop treating it as a failure of quality and instead see it as a failure of divergence. Content generated with minimal human input feels repetitive because modern AI systems are exceptionally good at converging toward the bland middle ground.

Most widely used AI tools either run on the same underlying models or on models trained on very similar data using similar objectives. As a result, they share a common center of gravity in style, tone, composition, and structure. When a user provides only a shallow prompt, the model naturally drifts toward what is most statistically typical: familiar phrasing, conventional imagery, and broadly agreeable ideas. This is not an accident; it is exactly what these systems are optimized to produce.

It is worth noting that individual human authors can also be repetitive. Many writers, painters, and composers return to the same themes, motifs, and structures throughout their careers. The difference is that a human's repetition is shaped by a unique set of lived experiences, preferences, and biases, which makes their work distinct from that of other humans, even when it is self-referential. Moreover, human creators are aware of their own past output and often make a conscious effort not to repeat themselves too closely.

AI systems lack both of these stabilizing forces. They have no personal history, no lived experience, and no awareness of what they have already produced in the past. Each generation starts in isolation. If a human author were to lose all memory of the last book they wrote or the last painting they made (while retaining all other memories), there is a good chance they would produce something strikingly similar again. AI operates permanently in this state of amnesia, which makes convergence not just likely, but inevitable unless a human actively pushes against it.

More details

(You can skip this if you are not interested in the inner workings of AI)

Several additional structural factors reinforce this tendency toward repetition, especially when human input is minimal:

- Optimization for acceptability - training objectives favor responses that are safe, correct, and broadly acceptable, rather than unusual or sharply opinionated.

- Underspecified prompts - vague inputs leave large gaps that the model fills statistically, defaulting to the most common and familiar patterns.

- No persistent taste or identity - unlike human creators, AI does not accumulate preferences or develop a personal style across time unless the user explicitly enforces continuity.

- Lack of internal resistance - creativity in humans often emerges from doubt, friction, or constraint. AI systems experience none of these and therefore rarely push beyond surface-level plausibility on their own.

- Scale amplifies sameness - even mild repetition becomes overwhelming when multiplied across thousands or millions of outputs, turning neutrality into noise.
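To make the "fills the gaps statistically" point a bit more tangible, here is a toy sketch in Python. It is not how any real model works internally. The five "phrasings" and their popularity weights are invented for illustration, and `temperature` is borrowed loosely from the sampling setting of the same name. It only shows that the more a process favors the already-common option, the more often two independent outputs turn out identical.

```python
def sharpen(weights, temperature):
    """Rescale a distribution; temperature < 1 favors already-common options."""
    scaled = [w ** (1.0 / temperature) for w in weights]
    total = sum(scaled)
    return [s / total for s in scaled]


def repeat_chance(probs):
    """Probability that two independent draws pick the same option."""
    return sum(p * p for p in probs)


# Hypothetical popularity of five ways to phrase the same idea (assumption).
phrasings = [0.40, 0.25, 0.15, 0.12, 0.08]

for temperature in (1.0, 0.5, 0.25):
    probs = sharpen(phrasings, temperature)
    print(
        f"temperature={temperature}: "
        f"top phrasing share={probs[0]:.2f}, "
        f"repeat chance={repeat_chance(probs):.2f}"
    )
```

As the distribution sharpens, the most common phrasing takes a larger share and the chance of two outputs matching rises. A detailed, opinionated prompt works in the opposite direction: it changes which options are on the table in the first place.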

When AI replaces thinking, thinking weakens

Recent studies examining how people solve problems or write essays with and without access to AI tools (while monitoring brain activity) point to a consistent pattern. When participants were given unrestricted access to AI from the outset, they were able to produce results quickly. The outputs were often competent on the surface, but also strikingly similar to one another. Measured brain activity was relatively low, and when asked to explain or reflect on what they had produced, participants frequently struggled to articulate the underlying ideas. More tellingly, once access to AI tools was removed, many of these participants were unable to solve comparable problems or produce similar essays on their own.

By contrast, participants who worked without AI assistance initially progressed more slowly. Their brain activity was higher, indicating sustained cognitive engagement, and their early results were often rougher. However, over time they improved: they became faster at solving similar tasks and more capable of producing structured, coherent essays. The effort invested in grappling with the problem appeared to compound rather than disappear. These results are not surprising at all, but it is good to mention them, because sometimes the most obvious things are the most overlooked.

A follow-up variation of these studies offers an important nuance. In this setup, participants were required to first formulate their ideas: outlining arguments, intentions, or problem-solving strategies. Only after that were they given access to AI tools. The results suggest a different dynamic altogether. The initial phase demanded genuine cognitive effort, but that engagement did not drop once AI was introduced. Instead, some participants remained mentally involved while using AI, likely because they had already developed a sense of ownership over the idea. The explanation is intuitive: when a person has invested effort in shaping an idea, the outcome matters to them. AI assistance then becomes a means of fleshing out details or accelerating execution, rather than a substitute for thinking. In this mode, the tool can amplify engagement instead of eroding it.

How to avoid AI brainrot

The most reliable way to avoid AI brainrot is to choose tasks that sit at the edge of your own capabilities. Ideally, they should be slightly beyond what you can comfortably do on your own, but not so far beyond that you have no meaningful way to contribute. If you do not know how to program a web application, asking AI to build one for you will not make you a developer. If you are unable to write a compelling short story, asking AI to produce a novel will not make you a writer. In such cases, you cannot evaluate, guide, or meaningfully shape the result. Your brain remains disengaged, and the output is almost guaranteed to be slop. This is not because AI is involved, but because a human is not.

The productive approach is the opposite. Pick a task you have long wanted to do, one that felt just slightly out of reach, but where you can already see the outline of a solution. Start on your own. Iterate. Bring AI in as an assistant rather than a replacement. Discard unsatisfactory results, refine constraints, and repeat until the outcome aligns with your intent. The effort may be frustrating at times, but that friction is precisely what keeps your mind engaged and allows skills to grow.

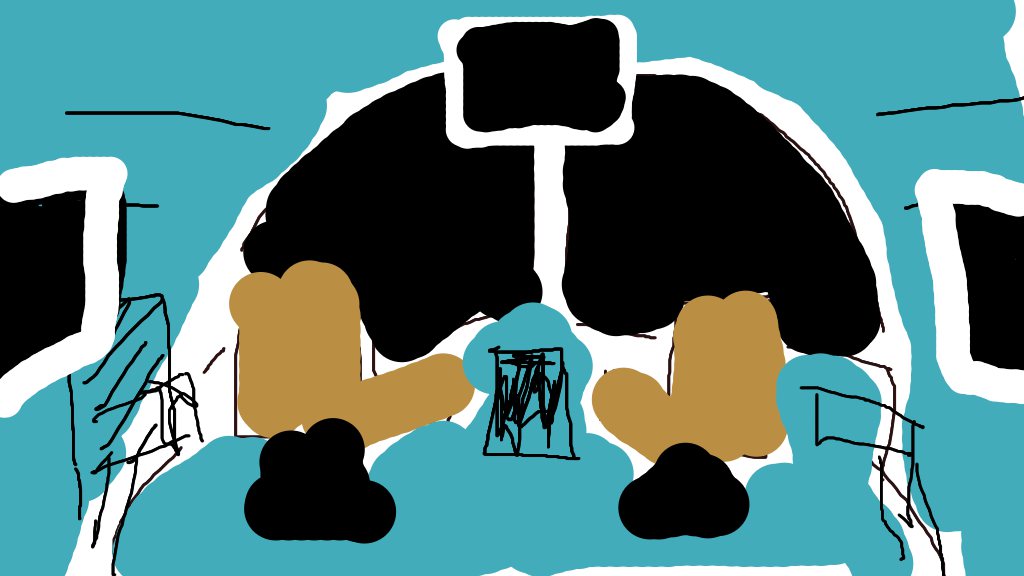

Let me tell you what I did recently. I needed an image of a spaceship cockpit for a text adventure I am working on. I began with a rough sketch of the composition and colors I wanted, then went through many iterations refining prompts, adjusting details, and selectively correcting problem areas in the generated images. The final result, taken on its own, would not qualify as visual art. But that was never the goal. The image exists to set the mood for a specific scene in an interactive story, and for that purpose it works. AI did not replace creativity here; it supported a concrete, human-defined intent and filled a gap in a larger project.

Sketch → final image

It is also worth noting that slop is not unique to AI. If someone uses a non-AI wizard in the cursor editor to generate a cursor that looks indistinguishable from thousands of others, the result is slop as well. If someone writes "67" in a cursor set review, that may seem funny(?) right now, but it is worthless in the long run. Slop emerges when low-effort, low-intent processes are used to flood the world with interchangeable output. AI does not create slop on its own; it produces slop when people instruct it to do so through disengaged use. In doing that, they harm themselves by learning nothing, and they harm others by wasting their time.